Is Lidar merely a sixth sense for automated cars?

Even before the tragic May 2016 accident involving a Tesla Model S and a semi-trailer, the use of Lidar had generated heated debate within the industry:

- Quite simply, the consensus view was that Lidar was not merely a nice-to-have, but was the best method of rapidly and accurately mapping the environment, while offering crucial system redundancy.

- Taking the opposing position, Tesla’s Elon Musk was quoted on the Teslarati website in July 2016 as saying: “I don’t think you need Lidar … you can do this all with passive optical and then with maybe one forward radar. I think that completely solves it without the use of Lidar. I’m not a big fan of Lidar, I don’t think it makes sense in this context.”

Notwithstanding Musk’s stated case against Lidar, many observers believe that Lidar, the technology used to create an accurate, real-time 3D map of a vehicle’s surroundings, is indispensable to any future self-driving program.

Referring to this capability, industry experts on self-driving cars have on several occasions argued that the Model S crash into a white tractor-trailer against a brightly lit sky could have been averted if Lidar had been incorporated into the Autopilot system that was engaged at the time.

Furthermore, it’s argued that as vehicles move to a higher level of autonomy they will require more redundancy, which Lidar provides.

What makes Lidar so important for self-driving cars?

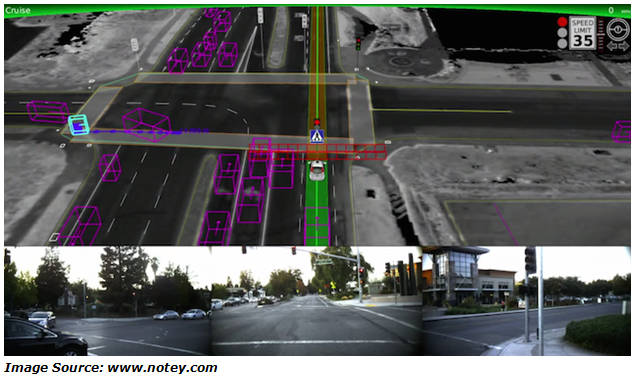

As with human drivers, automated vehicles function by building a 3D map of their environment relative to their own position, although humans do this intuitively and subconsciously. Lidar, first used by the National Center for Atmospheric Research to map cloud formations, is ideally suited to this task because it can calculate distances accurately in real time.
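Lidar typically measures distance by time of flight: it times how long a laser pulse takes to reach a target and bounce back. A minimal sketch of that arithmetic, assuming a simple pulsed sensor and treating the speed of light in air as equal to its vacuum value (the function name `tof_range_m` is hypothetical):

```python
# Speed of light in vacuum (m/s); this sketch assumes air is close enough.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_range_m(round_trip_s: float, speed_m_s: float = SPEED_OF_LIGHT_M_S) -> float:
    """Return the one-way distance to a target from a pulse's round-trip time.

    The pulse travels out to the target and back, so the distance is
    half of speed * time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return speed_m_s * round_trip_s / 2.0


# A return arriving 400 ns after the pulse left corresponds to about 60 m.
print(f"{tof_range_m(400e-9):.2f} m")
# A 1 ns timing error shifts the estimate by about 15 cm.
print(f"{tof_range_m(1e-9) * 100:.1f} cm")
```

Because each nanosecond of round-trip time corresponds to roughly 15 cm of range, centimetre-level accuracy requires the sensor to time returns to a small fraction of a nanosecond.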

For example, the Velodyne HDL-64E fitted to the original “Google car” cost about $75,000 and used 64 lasers and 64 photodiodes to scan the world, producing 64 "lines" of data output with a maximum range of 100 to 150 m.
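Each of those lasers is fixed at its own elevation angle while the head spins, so every return is a range measured at a known azimuth and elevation; turning it into a 3D point is a spherical-to-Cartesian conversion. A minimal sketch, assuming an idealized sensor with no per-laser calibration offsets; the function names and the axis convention are hypothetical:

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def beam_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one return (range, azimuth, elevation) into sensor-frame x, y, z.

    Assumed convention: x forward, y left, z up; azimuth is measured from
    the x axis towards the y axis, elevation up from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the horizontal plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def fire_column(ranges_m: List[float], elevations_deg: List[float], azimuth_deg: float) -> List[Point]:
    """One firing of a multi-laser head: all lasers share the current azimuth,
    but each has its own fixed elevation and so feeds its own scan line."""
    if len(ranges_m) != len(elevations_deg):
        raise ValueError("need one range per laser")
    return [beam_to_xyz(r, azimuth_deg, e) for r, e in zip(ranges_m, elevations_deg)]


# Three hypothetical lasers aimed at 0, -2 and -4 degrees, firing straight ahead.
for x, y, z in fire_column([20.0, 18.5, 15.0], [0.0, -2.0, -4.0], azimuth_deg=0.0):
    print(f"x={x:.2f} y={y:.2f} z={z:.2f}")
```

Collecting one laser's points over a full revolution traces out one of the "lines"; real sensors typically also need per-laser angle and distance corrections.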

To reduce the high cost of the system, Velodyne recently released the $8,000 “Puck”, which has only 16 channels; it is cheaper and less complex, but its resolution is also much lower.

Lidar costs can be reduced even further, to something cheap enough for sub-$1,000 consumer devices such as the Lidar-guided Neato Botvac robotic vacuum, which uses a single-laser system to obtain a 2D view of the world. But at what point does the resolution become too low to be useful for a self-driving car?
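One way to make the resolution question concrete is to count how many scan lines actually strike a pedestrian at a given distance. A minimal sketch, assuming idealized, evenly spaced beams; the angular ranges are approximate published figures (roughly +2° to -24.8° for the HDL-64E and ±15° for the 16-channel Puck), while the 1.8 m mounting height, 1.7 m pedestrian, and 30 m distance are hypothetical:

```python
import math
from typing import List


def evenly_spaced(lo_deg: float, hi_deg: float, n: int) -> List[float]:
    """Return n beam elevations spread evenly from lo_deg to hi_deg inclusive.

    Real sensors do not always space their beams evenly; this is an idealization.
    """
    if n == 1:
        return [lo_deg]
    step = (hi_deg - lo_deg) / (n - 1)
    return [lo_deg + i * step for i in range(n)]


def beams_on_target(elevations_deg: List[float], distance_m: float,
                    mount_height_m: float, target_height_m: float) -> int:
    """Count beams that hit an upright target standing distance_m away.

    A beam at elevation theta reaches height mount + d * tan(theta) at
    distance d; it hits the target if that height lies between the ground
    and the top of the target.
    """
    hits = 0
    for theta in elevations_deg:
        z = mount_height_m + distance_m * math.tan(math.radians(theta))
        if 0.0 <= z <= target_height_m:
            hits += 1
    return hits


SENSORS = {
    "64-channel (HDL-64E-like)": evenly_spaced(-24.8, 2.0, 64),
    "16-channel (Puck-like)": evenly_spaced(-15.0, 15.0, 16),
    "single plane (2D scanner)": [0.0],
}

for name, beams in SENSORS.items():
    print(name, beams_on_target(beams, distance_m=30.0, mount_height_m=1.8, target_height_m=1.7))
```

Under these assumptions the 64-channel layout puts 7 lines on the pedestrian and the 16-channel layout only 2, while a single horizontal plane can never return more than one line, and returns none here because its plane passes above the pedestrian's head.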

Waymo, for one, seems to favor a more detailed view of the world, claiming that its in-house-developed Lidar captures enough detail to detect not only pedestrians around the car but also the direction they are facing. This is the true value of Lidar: the ability to harvest an immense amount of data about a changing environment, such as the cues needed to predict the direction in which a pedestrian may move next.
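Turning raw points into a prediction of where a pedestrian will go next involves clustering, tracking, and motion modelling. As a deliberately simple illustration, and not a description of Waymo’s method, the sketch below assumes the pedestrian’s points have already been isolated in two consecutive scans, takes the centroid of each, and extrapolates at constant velocity; the function names and sample values are hypothetical:

```python
from typing import List, Tuple

Point2D = Tuple[float, float]  # (x, y) in metres on the ground plane


def centroid(points: List[Point2D]) -> Point2D:
    """Mean position of a cluster of Lidar points."""
    if not points:
        raise ValueError("cluster is empty")
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def predict_position(prev_pts: List[Point2D], curr_pts: List[Point2D],
                     dt_s: float, horizon_s: float) -> Point2D:
    """Extrapolate a cluster's centroid horizon_s seconds ahead, assuming it
    keeps the velocity observed between two scans taken dt_s apart."""
    (x0, y0), (x1, y1) = centroid(prev_pts), centroid(curr_pts)
    vx, vy = (x1 - x0) / dt_s, (y1 - y0) / dt_s
    return (x1 + vx * horizon_s, y1 + vy * horizon_s)


# Hypothetical pedestrian drifting sideways by 15 cm between scans 0.1 s apart.
prev_scan = [(9.9, 1.9), (10.1, 2.1)]
curr_scan = [(9.9, 2.05), (10.1, 2.25)]
print(predict_position(prev_scan, curr_scan, dt_s=0.1, horizon_s=1.0))
```

Practical systems typically use filtering (for example Kalman filters) and richer cues, such as the body orientation the Waymo claim refers to, rather than a bare two-frame extrapolation.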

The problems with Lidar are that it generates a large amount of data and is still too expensive for OEMs to implement.
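A back-of-the-envelope estimate shows why the data volume matters. The sketch assumes roughly 1.3 million points per second (approximately the single-return rate quoted for the HDL-64E) and a hypothetical 16-byte point record holding x, y, z, and intensity as 32-bit floats; real sensors use their own packet formats:

```python
import struct

# Hypothetical point record: x, y, z, intensity as little-endian 32-bit floats.
POINT_FORMAT = "<4f"
BYTES_PER_POINT = struct.calcsize(POINT_FORMAT)  # 16 bytes


def data_rate_mb_s(points_per_s: float, bytes_per_point: int = BYTES_PER_POINT) -> float:
    """Raw point-cloud throughput in megabytes (10**6 bytes) per second."""
    return points_per_s * bytes_per_point / 1e6


rate = data_rate_mb_s(1.3e6)        # assumed ~1.3 million points per second
per_hour_gb = rate * 3600 / 1000    # one hour of driving, in gigabytes
print(f"{rate:.1f} MB/s, about {per_hour_gb:.0f} GB per hour")
```

Under these assumptions a single sensor produces about 21 MB/s, or roughly 75 GB per hour of driving, all of which must be processed in real time.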

Also, while Lidar works well in all light conditions, its performance degrades as snow, fog, rain, and airborne dust increase, because the short, optical-range wavelengths it uses are scattered and absorbed by these particles. Lidar also cannot detect colour or contrast, and cannot provide optical character recognition.
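The weather sensitivity follows from how light is attenuated along its path. A rough sketch using the Beer-Lambert law, in which the fraction of a pulse that survives the round trip falls off exponentially with distance, together with Koschmieder’s relation to convert meteorological visibility into an extinction coefficient. The relation is strictly defined for visible light rather than the near-infrared wavelengths many Lidars use, and the visibility values are hypothetical:

```python
import math


def extinction_from_visibility(visibility_m: float) -> float:
    """Koschmieder's relation: extinction coefficient (1/m) ~= 3.912 / visibility.

    Defined for visible light; used here only as a rough stand-in for
    Lidar wavelengths.
    """
    return 3.912 / visibility_m


def round_trip_transmission(extinction_per_m: float, range_m: float) -> float:
    """Fraction of the signal surviving the trip out and back (Beer-Lambert law)."""
    return math.exp(-2.0 * extinction_per_m * range_m)


for label, visibility in [("clear air, 10 km visibility", 10_000.0),
                          ("dense fog, 50 m visibility", 50.0)]:
    t = round_trip_transmission(extinction_from_visibility(visibility), range_m=50.0)
    print(f"{label}: {t:.4f} of the signal returns from a 50 m target")
```

This ignores geometric spreading and target reflectivity, which weaken the return further in every condition; the point is the exponential penalty that fog adds on top.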

Taking these shortcomings into account, it is apparent that Lidar, while very important, has several limitations that other sensors can overcome when fused into an automated driving system.

Are there viable alternatives to Lidar?

Radar is the master of motion measurement: it uses radio waves to determine the velocity, range, and angle of objects. Radar is computationally lighter than a camera and generates far less data than Lidar. Although it is less angularly accurate than Lidar, radar works in virtually all weather and lighting conditions and can even use reflections to see behind obstacles. Many self-driving prototypes currently use radar and Lidar together to “cross-validate” what they are seeing and to predict motion.
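Much of radar’s strength in motion measurement comes from the Doppler effect: the echo from an approaching or receding object is shifted in frequency in proportion to its radial speed. A minimal sketch, assuming a 77 GHz carrier (within the 76–81 GHz automotive radar band) and a target moving along the line of sight; the function names are hypothetical:

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
CARRIER_HZ = 77e9  # assumed carrier frequency


def doppler_shift_hz(radial_speed_m_s: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Frequency shift of the echo from a target closing at radial_speed_m_s.

    The factor of 2 reflects the two-way path: out to the target and back.
    """
    return 2.0 * radial_speed_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


def radial_speed_m_s(shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Invert the Doppler relation to recover the target's radial speed."""
    return shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


shift = doppler_shift_hz(10.0)  # a car closing at 10 m/s (36 km/h)
print(f"{shift:.0f} Hz shift -> {radial_speed_m_s(shift):.1f} m/s")
```

Only the radial component of motion is measured this way; traffic crossing directly in front of the car produces little or no shift, which is one reason radar is cross-checked against other sensors.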

Although ultrasonic sensors have very short range, they are excellent for close-range three-dimensional mapping, and because sound waves travel comparatively slowly, they can resolve differences of a centimetre or less. They work regardless of light levels and, because of the short distances involved, work equally well in snow, fog, and rain. But like Lidar and radar, they provide no colour, contrast, or optical character recognition capabilities. Also, because of their short range, they are not useful for gauging speed, but they are small and cheap.
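The fine resolution follows from the same time-of-flight principle Lidar uses, only with a much slower wave. Comparing the extra echo delay produced by a 1 cm change in distance for sound and for light shows the difference; 343 m/s is an assumed value for air at roughly 20 °C, and the function name is hypothetical:

```python
SPEED_OF_SOUND_M_S = 343.0          # dry air at about 20 degrees C (assumed)
SPEED_OF_LIGHT_M_S = 299_792_458.0


def echo_time_difference_s(delta_distance_m: float, wave_speed_m_s: float) -> float:
    """Extra round-trip time caused by a target being delta_distance_m farther away."""
    return 2.0 * delta_distance_m / wave_speed_m_s


sound_dt = echo_time_difference_s(0.01, SPEED_OF_SOUND_M_S)
light_dt = echo_time_difference_s(0.01, SPEED_OF_LIGHT_M_S)
print(f"1 cm -> {sound_dt * 1e6:.1f} microseconds for sound, "
      f"{light_dt * 1e12:.1f} picoseconds for light")
```

A delay of tens of microseconds can be timed with simple, inexpensive electronics, whereas resolving the same 1 cm with light requires picosecond-scale timing.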

Cameras, on the other hand, lead the way in classification and texture interpretation. Although they are by far the cheapest and most widely available sensors, cameras produce massive amounts of data (a full-HD frame contains about two million pixels, or several megabytes of raw data), making processing a computationally intensive and algorithmically complex job.
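The arithmetic behind that figure, assuming uncompressed 8-bit RGB frames and a hypothetical 30 frames per second from a single camera (the function name is hypothetical):

```python
def raw_frame_bytes(width_px: int, height_px: int, bytes_per_pixel: int = 3) -> int:
    """Size of one uncompressed frame, assuming 8-bit RGB (3 bytes per pixel)."""
    return width_px * height_px * bytes_per_pixel


frame = raw_frame_bytes(1920, 1080)   # full HD
per_second = frame * 30               # assumed 30 frames per second
print(f"{1920 * 1080:,} pixels, {frame / 1e6:.1f} MB per frame, {per_second / 1e6:.0f} MB/s")
```

A vehicle carrying several cameras multiplies this figure accordingly, before any compression is applied.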

Camera-based image recognition systems have become very cheap, small, and high-resolution in recent years. They are less useful for very-close-proximity assessment than for longer distances. Their ability to perceive color and contrast and to perform optical character recognition is entirely missing from all other sensors. They have the best range of any sensor, but only when the environment is well lit.

Digital signal processing makes it possible to determine speed from camera images, but not as accurately as radar or Lidar systems can.
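One simple way to see why camera-derived speed is less precise: under a pinhole camera model, an object’s sideways speed follows from how many pixels it moves between frames, but only if its distance is known, and a single camera has to infer that distance. A minimal sketch; the focal length, distance, pixel shift, and frame rate are hypothetical values:

```python
def lateral_speed_m_s(pixel_shift: float, depth_m: float,
                      focal_length_px: float, frame_interval_s: float) -> float:
    """Pinhole-model estimate of an object's speed across the image plane.

    A shift of pixel_shift pixels at distance depth_m corresponds to
    pixel_shift * depth_m / focal_length_px metres of real motion.
    """
    return pixel_shift * depth_m / (focal_length_px * frame_interval_s)


dt = 1 / 30  # assumed 30 fps camera
speed_correct = lateral_speed_m_s(15, depth_m=20.0, focal_length_px=1000.0, frame_interval_s=dt)
speed_overestimated = lateral_speed_m_s(15, depth_m=22.0, focal_length_px=1000.0, frame_interval_s=dt)
print(f"{speed_correct:.1f} m/s with the correct depth, "
      f"{speed_overestimated:.1f} m/s if depth is overestimated by 10%")
```

The speed error scales directly with the depth error, whereas radar measures radial speed directly and Lidar measures distance directly.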

So how much weight should be placed on the quality of the information returned by the sensors? Is the lower acuity of radar adequate to identify most objects and vehicles, allowing the car to travel safely at higher speeds than a human driver could? Or is the accuracy too low, forcing the car to slow down so that the other sensors can gather enough information to respond in time?
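The trade-off can be framed as a stopping-distance budget: the car must be able to react and brake within the distance at which its sensors reliably detect an obstacle. Solving `d = v·t_r + v²/(2a)` for `v` gives the highest safe speed. A minimal sketch; the 0.5 s sensing-and-reaction latency and 6 m/s² deceleration are hypothetical, and road grade, surface, and the obstacle’s own motion are ignored:

```python
import math


def max_safe_speed_m_s(detection_range_m: float, latency_s: float, decel_m_s2: float) -> float:
    """Highest speed at which the car can still stop within detection_range_m.

    Solves detection_range = v * latency + v**2 / (2 * decel) for v >= 0.
    """
    a, t = decel_m_s2, latency_s
    return a * (-t + math.sqrt(t * t + 2.0 * detection_range_m / a))


for reliable_range in (50.0, 100.0):
    v = max_safe_speed_m_s(reliable_range, latency_s=0.5, decel_m_s2=6.0)
    print(f"reliable detection at {reliable_range:.0f} m -> at most {v * 3.6:.0f} km/h")
```

Under these assumptions, halving the reliable detection range from 100 m to 50 m lowers the safe speed from about 114 km/h to about 78 km/h, which is the sense in which lower-acuity sensors may force the car to slow down.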

Can level 3 and 4 automated vehicles operate safely without costly Lidar?

Tesla assessed these factors, and presumably others, and concluded that Lidar was not required for an effective full sensor suite. The current set of sensors on a Tesla probably has a hardware cost in the same range as a single next-generation solid-state Lidar sensor that is not yet in production, yet it can provide excellent performance under most conditions.

What it would appear to lack is high-resolution imaging in adverse lighting conditions, where Lidar has an edge over lower-resolution radar and camera image recognition systems.

Nevertheless, whether or not Lidar is required, no solution based on a single type of sensor, or even on two types, is ever likely to be viable.

Each sensor type has strengths and weaknesses, and fusing the data from multiple sensors into a single representation of reality is required to avoid both false positives and false negatives.
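A minimal sketch of two basic fusion ideas, cross-validation and weighting, applied to range estimates of the same object from two sensors. It assumes independent Gaussian errors with hypothetical standard deviations and hypothetical function names; production systems typically use far more elaborate tracking and fusion frameworks:

```python
import math
from typing import Tuple


def fuse(r1: float, sigma1: float, r2: float, sigma2: float) -> Tuple[float, float]:
    """Inverse-variance weighted average of two independent estimates.

    The more precise sensor gets the larger weight, and the fused standard
    deviation is smaller than either input's.
    """
    w1, w2 = 1.0 / sigma1 ** 2, 1.0 / sigma2 ** 2
    fused = (w1 * r1 + w2 * r2) / (w1 + w2)
    return fused, math.sqrt(1.0 / (w1 + w2))


def consistent(r1: float, sigma1: float, r2: float, sigma2: float, k: float = 3.0) -> bool:
    """Cross-validate: do the two readings agree within k combined standard deviations?"""
    return abs(r1 - r2) <= k * math.sqrt(sigma1 ** 2 + sigma2 ** 2)


radar, radar_sigma = 25.4, 0.5     # hypothetical radar range (m) and noise
lidar, lidar_sigma = 25.0, 0.05    # hypothetical Lidar range (m) and noise

if consistent(radar, radar_sigma, lidar, lidar_sigma):
    est, sigma = fuse(radar, radar_sigma, lidar, lidar_sigma)
    print(f"fused range {est:.2f} m (sigma {sigma:.3f} m)")
else:
    print("sensors disagree: flag for further checks")
```

When the readings disagree, averaging them would hide the problem; the disagreement itself is the signal that one sensor may be reporting a phantom object or missing a real one.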

In search of a cost-effective, safe automated-driving system, Delphi and Mobileye recently teamed up to develop a near-complete autonomous driving system by 2019. The plan is to create a mass-market, off-the-shelf system that can be plugged into a variety of vehicle types, from smaller cars to SUVs.

Mobileye Chairman and Chief Technology Officer Amnon Shashua said the CSLP system would be radar- and camera-centric (similar to the system Mobileye developed with Tesla), with Lidar operating as a redundant sensor. This would help keep the cost of the system down to only a few thousand dollars.

So, to answer the question: yes, in a well-engineered system such as the Mobileye/Delphi or Tesla designs, Lidar can be regarded as a sixth sense, an insurance against something going wrong, but one that I would want in any self-driving car parked in my garage.

Sources:

- Ron Amadeo; Ars Technica; Google’s Waymo invests in LIDAR technology, cuts costs by 90 percent; January 2017; https://arstechnica.com/cars/2017/01/googles-waymo-invests-in-lidar-technology-cuts-costs-by-90-percent/

- Paul Lienert, Alexandria Sage; Reuters; Tesla crash raises stakes for self-driving vehicle startups; July 2016; http://www.reuters.com/article/autos-selfdriving-investment-idUSL1N19X1SW

- Michael Barnard; Clean Technica; Tesla & Google Disagree About LIDAR — Which Is Right?; July 2016; https://cleantechnica.com/2016/07/29/tesla-google-disagree-lidar-right/